MCP Prompts — Making AI Communication Structured

This article is part of a 6-part series. To check out the other articles, see:

Primitives: Tools | Resources | Prompts | Sampling | Roots | Elicitation

Picture a busy control room where every operator can type anything they want into the AI assistant.

One types “Check this payment,” another says “audit the same payment,” and both get wildly different results. That everyday chaos is exactly why MCP created Prompts.

Instead of letting the model guess what “check” or “audit” should mean, Prompts turn each intention into a named, reusable recipe, such as /fraud_triage, /sox_report, or /patient_handoff, that the user deliberately chooses from a menu, a dropdown, or any other picker you add to your apps!

Thanks to this, the AI no longer has to guess what the user meant; it simply follows the card the human has played. This single switch removes the ambiguity, makes sure every critical step is spelled out, and still leaves the human holding the deck.

In the sections ahead we’ll walk through the guide part by part: Part I explains why Prompts are the antidote to the “interpretation lottery” and how they keep humans firmly in command; Part II shows the JSON-RPC calls that let clients discover and execute these recipes with precision; Part III jumps into code you can paste into your server, from a minimal “hello prompt” to a (probably, don't sue me!) SOX-compliant financial workflow; Part IV hands you the production checklist (validation, caching, audit trails) so your prompts stay safe and fast when real users arrive; and Parts V and VI close with guardrails and a quick-reference cheat sheet. Let's dive in!

Specification Note: This guide is based on the official & latest MCP Prompts specification (Protocol Revision: 2025-06-18). Where this guide extends beyond the official spec with patterns or recommendations, it is clearly marked.

Part I: Foundation - The "Why & What"

This section establishes the conceptual foundation. If a reader only reads this part, they will understand what Prompts are, why they are critically important, and where they fit in the ecosystem.

1. Core Concept

1.1 One-Sentence Definition

Prompts are reusable, user-initiated workflow templates that standardize complex interactions, giving the human complete authority to eliminate ambiguity and guarantee procedural integrity in AI systems.

1.2 The Core Analogy

Think of a Prompt as a "Recipe Card" in a smart kitchen.

- The World Before (Ambiguity): You tell a rookie chef, "Make a nice dinner." You might get a sandwich, a seven-course meal, or a burnt kitchen. The outcome is unpredictable because the instruction is vague. This is the state of most natural language interactions with AI—an "interpretation lottery."

- The World With Prompts (Certainty): You hand the chef a detailed recipe card for "Boeuf Bourguignon." It lists the exact ingredients (arguments like `patient_id`), the precise steps (the workflow), and the expected outcome. The result is consistent and repeatable, regardless of the chef's mood or experience.

A Prompt is this recipe card. The user explicitly chooses the recipe (e.g., /fraud_triage), provides the specific ingredients (e.g., case_id="7F93"), and the MCP server assembles the expert-designed workflow the same way every time.

1.3 Architectural Position & Control Model

Prompts are a server-side primitive whose execution is initiated by the user via the Host/Client.

```
+-----------+  1. User initiates via slash command         +-----------+
|           |     (e.g., /fraud_triage case_id="...")      |           |
|   User    +--------------------------------------------->|   Host    |
| Interface |                                              | (Client)  |
|           |<---------------------------------------------+           |
+-----------+  4. Host renders final, structured result    +-----------+
                                                               |    ^
      +--------------------------------------------------------+    |
      |  2. Host sends `prompts/get` request                        |
      v                                                             |
+-----------+                                                       |
|           |  3. Server returns `GetPromptResult`                  |
|    MCP    +-------------------------------------------------------+
|  Server   |
|           |<---> Orchestrates Tools, Resources, etc.
+-----------+
```

The Control Model is the most critical aspect of Prompts:

- Control Type: User-Controlled (Explicit)

- Controlling Actor: The Human User

This is not a minor detail; it is the entire foundation of the primitive's value. Prompts are designed to be explicitly selected by a human, not invoked by the AI on its own: the host surfaces them (for example, as slash commands) and calls `prompts/get` only when the user picks one. Because the host enforces this boundary, the system can be made auditable, secure, and compliant in high-stakes environments. It shifts control from unpredictable AI interpretation to deterministic human command.

1.4 What It Is NOT

- It is NOT a Tool: A Tool is an action the AI chooses to perform (e.g., `query_database()`). A Prompt is a workflow the user commands the system to execute (e.g., `/run_compliance_report`). This distinction is the bedrock of MCP's security model.

- It is NOT just a text template: A simple text template just substitutes variables. An MCP Prompt is an orchestration recipe that can dynamically fetch data, define Tool execution sequences, and embed security context based on user arguments.

2. The Problem It Solves

2.1 The LLM-Era Catastrophe: The Ambiguity Tax

The core problem Prompts solve is the Ambiguity Tax—the massive, recurring cost organizations pay when AI systems misinterpret vague, natural-language instructions. This isn't just an inconvenience; it's a catastrophic failure mode with documented, severe consequences.

- The "Before" State:

- Unpredictable Outcomes: A command like "Investigate this anomaly" can lead to wildly different results depending on the AI model, the time of day, or the phrasing. This turns critical operations into a lottery.

- Quantified Failures: This ambiguity directly contributes to a 73% project failure rate for AI integrations and introduces an 8-12 week delay for each new integration.

- Catastrophic Costs: In high-stakes domains, interpretation errors lead to expensive failures, such as invalidated clinical trials, undetected financial fraud, and missed safety flags in aviation.

2.2 Why Traditional Approaches Fail

- "Better Prompt Engineering": This approach attempts to solve ambiguity by writing more and more detailed instructions in natural language. It fails because it's a Band-Aid, not a cure. It's brittle (a model update can break it), un-auditable (the AI's reasoning is still a black box), and doesn't scale. You're still just hoping the AI understands.

- Custom API Wrappers: Teams build bespoke, hard-coded workflows for every task. This solves ambiguity for one task but creates the M×N integration crisis, leading to thousands of brittle, unmaintainable integrations and severe vendor lock-in.

Prompts solve both problems by providing a standardized, reusable, and user-controlled way to define and execute complex workflows with absolute certainty.

Part II: Technical Architecture - The "How"

This is the technical deep dive, detailing the protocol mechanics, message structures, and the end-to-end lifecycle. This section is the source of truth for implementers.

3. Protocol Specification

Prompts are managed through two primary JSON-RPC methods: prompts/list for discovery and prompts/get for execution. Servers supporting prompts MUST declare the prompts capability during initialization.

```json
{
  "capabilities": {
    "prompts": {
      "listChanged": true
    }
  }
}
```

The listChanged boolean indicates whether the server will emit notifications when its list of available prompts changes.
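A client can use that declaration to decide whether to show any prompt UI at all, and whether it can rely on the server to announce changes. A minimal sketch, assuming the client keeps the server's `initialize` result as a plain dict (the helper names `supports_prompts` and `emits_prompt_list_changes` are hypothetical):

```python
from typing import Any


def supports_prompts(init_result: dict) -> bool:
    """True if the server declared the `prompts` capability during initialization."""
    return "prompts" in init_result.get("capabilities", {})


def emits_prompt_list_changes(init_result: dict) -> bool:
    """True only if the server promised notifications/prompts/list_changed."""
    prompts_cap: Any = init_result.get("capabilities", {}).get("prompts") or {}
    return bool(prompts_cap.get("listChanged", False))


# Example: the capabilities object from the declaration above.
init_result = {"capabilities": {"prompts": {"listChanged": True}}}
print(supports_prompts(init_result), emits_prompt_list_changes(init_result))  # → True True
```

If `emits_prompt_list_changes` is false, the client cannot count on notifications and should re-fetch the list at natural points, such as when the prompt menu is opened.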

3.1 prompts/list: Discovering Available "Recipe Cards"

This method allows a client to ask the server, "What workflows are available for me to run?"

Request:

```jsonc
{
  "jsonrpc": "2.0",
  "method": "prompts/list",
  "params": {
    // Optional: The cursor from a previous response to get the next page.
    "cursor": "optional-cursor-value"
  },
  "id": "request-id-123"
}
```

Response (ListPromptsResult):

```jsonc
{
  "jsonrpc": "2.0",
  "id": "request-id-123",
  "result": {
    "prompts": [
      {
        "name": "fraud_triage",
        // Optional: A human-readable name for display in UIs.
        "title": "Fraud Investigation Triage",
        "description": "Expert fraud investigation workflow",
        "arguments": [
          {
            "name": "case_id",
            "description": "Unique case identifier",
            "required": true
          }
        ]
      }
    ],
    // Optional: A cursor to be used in the next request to fetch more results.
    "nextCursor": "next-page-cursor"
  }
}
```

3.1.1 Pagination Support

The prompts/list operation supports pagination for servers with many prompts:

- The request can include an optional `cursor` parameter to retrieve a specific page of results.
- The response can include an optional `nextCursor` value. If present, clients can use this value in a subsequent request to retrieve the next page of prompts.
- Clients SHOULD be designed to handle pagination to ensure they can correctly interact with servers that have a large number of available prompts.
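The pagination rules above boil down to one loop: keep passing `nextCursor` back until the server stops returning it. A minimal sketch, assuming a hypothetical `send_request(method, params)` hook that issues a JSON-RPC request and returns its `result` object; the `max_pages` guard is an added safety assumption, not something the spec requires:

```python
from __future__ import annotations

from typing import Any, Callable

# Hypothetical transport hook: sends a JSON-RPC request and returns its `result`.
SendRequest = Callable[[str, dict], dict]


def list_all_prompts(send_request: SendRequest, max_pages: int = 100) -> list[dict[str, Any]]:
    """Collect every prompt by following nextCursor until it is absent."""
    prompts: list[dict[str, Any]] = []
    cursor: str | None = None
    for _ in range(max_pages):
        # Cursors are opaque tokens: pass them back unchanged, never parse them.
        params = {"cursor": cursor} if cursor else {}
        result = send_request("prompts/list", params)
        prompts.extend(result.get("prompts", []))
        cursor = result.get("nextCursor")
        if not cursor:
            return prompts
    raise RuntimeError(f"Gave up after {max_pages} pages; the server may be looping")


# Usage with a fake two-page server.
PAGES = {
    None: {"prompts": [{"name": "fraud_triage"}], "nextCursor": "page-2"},
    "page-2": {"prompts": [{"name": "sox_report"}]},
}


def fake_send(method: str, params: dict) -> dict:
    assert method == "prompts/list"
    return PAGES[params.get("cursor")]


print([p["name"] for p in list_all_prompts(fake_send)])
```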

3.2 prompts/get: Executing a Specific "Recipe"

This is the core method for invoking a prompt with user-provided arguments.

Request:

```jsonc
{
  "jsonrpc": "2.0",
  "method": "prompts/get",
  "params": {
    // The programmatic name of the prompt to execute.
    "name": "fraud_triage",
    // A key-value map of arguments provided by the user.
    "arguments": {
      "case_id": "7F93"
    }
  },
  "id": "request-id-456"
}
```

Note: Arguments for prompts/get may be auto-completed through the completion API (`completion/complete`). See the MCP specification for details on argument completion.

Response (GetPromptResult):

The server returns a structured set of messages, fully composed and ready for the LLM or application to process.

```json
{
  "jsonrpc": "2.0",
  "id": "request-id-456",
  "result": {
    "description": "Fraud triage for case 7F93",
    "messages": [
      {
        "role": "user",
        "content": {
          "type": "text",
          "text": "FRAUD INVESTIGATION PROTOCOL - Case 7F93..."
        }
      }
    ]
  }
}
```
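Once the result arrives, the host still has to hand these messages to its model. A minimal sketch of both ends on the client, working on plain dicts rather than any SDK types (the function names `build_get_request` and `to_chat_history` are hypothetical):

```python
from __future__ import annotations

from typing import Any


def build_get_request(request_id: str, name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Compose a prompts/get request; the spec types argument values as strings."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "prompts/get",
        "params": {"name": name, "arguments": {k: str(v) for k, v in arguments.items()}},
    }


def to_chat_history(result: dict[str, Any]) -> list[dict[str, str]]:
    """Flatten GetPromptResult messages into role/text pairs for an LLM call.

    Only text blocks are passed through; image, audio, and resource blocks
    need model-specific handling, so this sketch swaps in a visible placeholder.
    """
    history = []
    for message in result["messages"]:
        content = message["content"]
        if content["type"] == "text":
            text = content["text"]
        else:
            text = f"[unsupported {content['type']} block]"
        history.append({"role": message["role"], "text": text})
    return history


request = build_get_request("request-id-456", "fraud_triage", {"case_id": "7F93"})
```

The placeholder is a deliberate choice: silently dropping a non-text block would hand the model an incomplete recipe without anyone noticing.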

3.2.1 Message Content Types

The content field within a PromptMessage can be one of several types to support rich, multi-modal interactions.

Embedded Resources: For referencing server-side resources directly.

```json
{
  "type": "resource",
  "resource": {
    "uri": "resource://example",
    "name": "example",
    "title": "My Example Resource",
    "mimeType": "text/plain",
    "text": "Resource content"
  }
}
```

Audio Content: For including audio information.

```json
{
  "type": "audio",
  "data": "base64-encoded-audio-data",
  "mimeType": "audio/wav"
}
```

Image Content: For including visual information.

```json
{
  "type": "image",
  "data": "base64-encoded-image-data",
  "mimeType": "image/png"
}
```

Text Content: The most common type for natural language.

```json
{
  "type": "text",
  "text": "The text content of the message"
}
```

Note on Annotations: All content types in prompt messages support optional annotations for metadata about audience, priority, and modification times.
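On the server, these blocks are plain JSON objects, and binary payloads must be base64-encoded before they go into `data`. A minimal sketch of builders for three of the types; the helper names are hypothetical, while the annotation fields shown (`audience` and `priority`) come from the spec, which constrains priority to the range 0 to 1:

```python
from __future__ import annotations

import base64
from typing import Any


def text_content(text: str, audience: list[str] | None = None,
                 priority: float | None = None) -> dict[str, Any]:
    """Text block with optional annotations (audience: "user" and/or "assistant")."""
    block: dict[str, Any] = {"type": "text", "text": text}
    annotations: dict[str, Any] = {}
    if audience is not None:
        annotations["audience"] = audience
    if priority is not None:
        if not 0.0 <= priority <= 1.0:
            raise ValueError("priority must be between 0 and 1")
        annotations["priority"] = priority
    if annotations:
        block["annotations"] = annotations
    return block


def image_content(raw_bytes: bytes, mime_type: str) -> dict[str, Any]:
    """Image block; the raw bytes are base64-encoded into `data`."""
    return {
        "type": "image",
        "data": base64.b64encode(raw_bytes).decode("ascii"),
        "mimeType": mime_type,
    }


def embedded_text_resource(uri: str, text: str, mime_type: str = "text/plain") -> dict[str, Any]:
    """Embedded resource block carrying text contents."""
    return {"type": "resource", "resource": {"uri": uri, "mimeType": mime_type, "text": text}}
```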

3.3 notifications/prompts/list_changed

When a server declares listChanged: true in its capabilities, it SHOULD send a notification to the client whenever the list of available prompts changes (e.g., a new prompt is added or an old one is removed).

Notification:

```jsonc
{
  "jsonrpc": "2.0",
  "method": "notifications/prompts/list_changed"
  // Notifications have no "id".
}
```

Clients receiving this notification should refresh their prompt list by making a new prompts/list request. This enables dynamic UIs that update in real-time without constant polling.
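On the client, the notification only needs to trigger a fresh prompts/list. A minimal routing sketch over raw JSON strings (the `NotificationRouter` class is hypothetical; the official SDKs ship their own handler registration):

```python
from __future__ import annotations

import json
from typing import Any, Callable

Handler = Callable[[dict], None]


class NotificationRouter:
    """Route JSON-RPC notifications (messages without an "id") to handlers."""

    def __init__(self) -> None:
        self._handlers: dict[str, Handler] = {}

    def on(self, method: str, handler: Handler) -> None:
        self._handlers[method] = handler

    def dispatch(self, raw: str) -> bool:
        """Return True if a registered handler consumed the message."""
        message: dict[str, Any] = json.loads(raw)
        if "id" in message:
            return False  # requests and responses are handled elsewhere
        handler = self._handlers.get(message.get("method", ""))
        if handler is None:
            return False  # unknown notifications can simply be ignored
        handler(message.get("params") or {})
        return True


# Usage: re-run prompts/list whenever the server says the list changed.
refreshes: list[str] = []
router = NotificationRouter()
router.on("notifications/prompts/list_changed", lambda params: refreshes.append("re-list"))
router.dispatch('{"jsonrpc": "2.0", "method": "notifications/prompts/list_changed"}')
```

In a real UI you may want to debounce the refresh, since a server can send several notifications in quick succession.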

3.4 Error Handling

Prompts utilize standard JSON-RPC error codes. A client must be prepared to handle them gracefully.

| Code | Error | Description & Client Action |
|---|---|---|
| -32601 | Method not found | The server does not support the Prompts primitive. The client should disable prompt-related UI features. |
| -32602 | Invalid params | The request is malformed. This typically means a required argument was missing, or the name does not correspond to an available prompt. The client should tell the user which argument is missing or that the command is invalid. |
| -32603 | Internal error | The server failed while trying to compose the prompt. This is a server-side issue. The client should display a generic error and suggest retrying later. |
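Mapping these codes to UI behavior is a natural lookup table. A minimal sketch of both sides: a server building a JSON-RPC 2.0 error response, and a client turning it into one of the actions from the table (the action labels in `CLIENT_ACTIONS` are hypothetical names for your UI):

```python
from __future__ import annotations

from typing import Any

# Client actions from the table above, keyed by JSON-RPC error code.
CLIENT_ACTIONS = {
    -32601: "disable_prompt_ui",
    -32602: "ask_user_to_fix_arguments",
    -32603: "show_generic_error_and_retry_later",
}


def error_response(request_id: Any, code: int, message: str) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 error response (server side)."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def client_action_for(response: dict[str, Any]) -> str | None:
    """Map a response to a UI action; None means the call succeeded."""
    error = response.get("error")
    if error is None:
        return None
    # Unknown codes fall back to the most conservative action.
    return CLIENT_ACTIONS.get(error["code"], "show_generic_error_and_retry_later")
```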

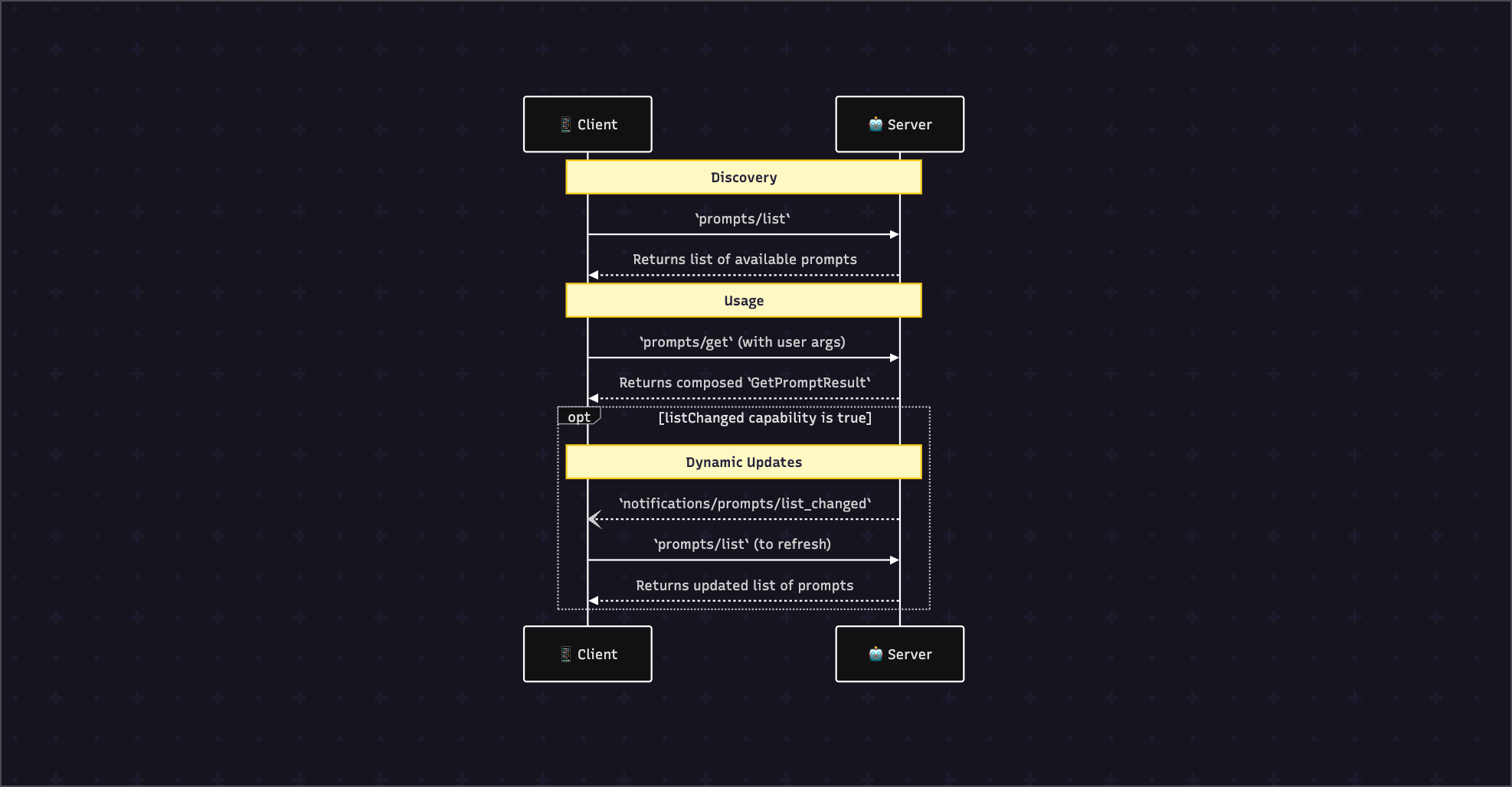

4. The Lifecycle & Message Flow

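The interaction lifecycle follows a clear discovery-then-usage pattern: the server declares the `prompts` capability during initialization, the client calls `prompts/list` to populate its menu, the user selects a prompt and supplies arguments, the client calls `prompts/get`, and, if the server declared `listChanged`, a `notifications/prompts/list_changed` message tells the client to list again.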
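The discovery and usage steps can be simulated without a transport. A minimal, in-memory sketch of the server side (the `handle` function and toy registry are hypothetical; initialization and `list_changed` are omitted, and `string.Template`, which substitutes in a single pass, stands in for a real template engine):

```python
from __future__ import annotations

from string import Template
from typing import Any

# Toy registry; `$case_id` is string.Template placeholder syntax.
REGISTRY: dict[str, dict[str, Any]] = {
    "fraud_triage": {
        "description": "Expert fraud investigation workflow",
        "arguments": [{"name": "case_id", "description": "Unique case identifier", "required": True}],
        "template": "FRAUD INVESTIGATION PROTOCOL - Case $case_id",
    }
}


def ok(rid: Any, result: dict) -> dict:
    return {"jsonrpc": "2.0", "id": rid, "result": result}


def err(rid: Any, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": rid, "error": {"code": code, "message": message}}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Serve prompts/list and prompts/get; every other method is -32601."""
    rid, method = request["id"], request["method"]
    params = request.get("params") or {}
    if method == "prompts/list":
        public = [
            {"name": n, "description": p["description"], "arguments": p["arguments"]}
            for n, p in REGISTRY.items()
        ]
        return ok(rid, {"prompts": public})
    if method == "prompts/get":
        prompt = REGISTRY.get(params.get("name"))
        if prompt is None:
            return err(rid, -32602, "Invalid prompt name")
        args = params.get("arguments") or {}
        missing = [a["name"] for a in prompt["arguments"] if a.get("required") and a["name"] not in args]
        if missing:
            return err(rid, -32602, f"Missing required arguments: {missing}")
        text = Template(prompt["template"]).safe_substitute(args)
        message = {"role": "user", "content": {"type": "text", "text": text}}
        return ok(rid, {"description": prompt["description"], "messages": [message]})
    return err(rid, -32601, "Method not found")


# Discovery: the host lists prompts to build its slash-command menu.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "prompts/list"})
menu = [p["name"] for p in listing["result"]["prompts"]]

# Usage: the user picks /fraud_triage and supplies case_id="7F93".
response = handle({
    "jsonrpc": "2.0", "id": 2, "method": "prompts/get",
    "params": {"name": "fraud_triage", "arguments": {"case_id": "7F93"}},
})
print(menu, response["result"]["messages"][0]["content"]["text"])
```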

Part III: Implementation Patterns - The "Show Me"

This section makes concepts concrete through a progression of code-heavy examples, from a simple "hello world" to a complex, production-ready workflow.

5. Pattern 1: The Canonical "Golden Path"

- Goal: To define and retrieve a simple, reusable prompt for a common developer task: code review.

- Key Takeaway: The server's primary job is to validate the user's request, fetch the correct template, safely substitute the arguments, and return a structured `GetPromptResult`. This transforms a simple user command into a rich, contextual instruction.

Implementation: This example uses Python with the official MCP Python SDK's low-level `Server` class, whose `get_prompt()` decorator receives the raw prompt name and arguments.

Server-Side (server.py)

````python
# server.py
import re

from mcp.server.lowlevel import Server
from mcp.shared.exceptions import McpError
from mcp.types import INVALID_PARAMS, ErrorData, GetPromptResult, PromptMessage, TextContent

app = Server("prompts-server-simple")

# This dictionary acts as our prompt template registry.
PROMPTS = {
    "code_review": {
        "description": "Comprehensive code review with best practices",
        "arguments": [
            {"name": "code", "required": True},
            {"name": "language", "required": False, "default": "Python"},
        ],
        "template": """
Please review the following {{language}} code:

```{{language}}
{{code}}
```

Provide feedback on:
- Code quality and best practices
- Potential bugs or security issues
- Suggested improvements
""",
    }
}


@app.get_prompt()
async def get_prompt(name: str, arguments: dict[str, str] | None) -> GetPromptResult:
    """Get a specific prompt with argument substitution."""
    if name not in PROMPTS:
        # Sent to the client as a -32602 (Invalid params) error.
        raise McpError(ErrorData(code=INVALID_PARAMS, message=f"Unknown prompt: {name}"))

    prompt = PROMPTS[name]
    template = prompt["template"]

    # Start from declared defaults, then overlay what the user supplied.
    values = {a["name"]: a["default"] for a in prompt["arguments"] if "default" in a}
    values.update(arguments or {})

    # Validate required arguments even when no arguments were sent at all.
    required = {a["name"] for a in prompt["arguments"] if a.get("required")}
    missing = required - set(values)
    if missing:
        # This also maps to a -32602 error.
        raise McpError(
            ErrorData(code=INVALID_PARAMS, message=f"Missing required arguments: {sorted(missing)}")
        )

    # Substitute declared arguments only; undeclared keys are ignored.
    for arg in prompt["arguments"]:
        key = arg["name"]
        if key in values:
            # ⚠️ SECURITY CRITICAL: Always sanitize user-provided input.
            # Note: this also strips characters common in real code, such as {} and <>.
            safe_value = re.sub(r"[<>{}$`]", "", str(values[key]))
            template = template.replace(f"{{{{{key}}}}}", safe_value)

    return GetPromptResult(
        description=prompt["description"],
        messages=[PromptMessage(role="user", content=TextContent(type="text", text=template))],
    )
````
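The example above covers only `prompts/get`. The matching `prompts/list` side can be derived from the same registry, as long as server-only fields such as `template` and `default` never reach the client. A minimal, SDK-free sketch (the function name `to_list_entries` is hypothetical, and the registry is trimmed for the example):

```python
from __future__ import annotations

from typing import Any

# Same shape as the PROMPTS registry above, trimmed for the example.
PROMPTS: dict[str, dict[str, Any]] = {
    "code_review": {
        "description": "Comprehensive code review with best practices",
        "arguments": [
            {"name": "code", "required": True},
            {"name": "language", "required": False, "default": "Python"},
        ],
        "template": "Please review the following {{language}} code: ...",
    }
}

# Argument fields we choose to expose; server-only fields like `default` are dropped.
ARGUMENT_FIELDS = ("name", "description", "required")


def to_list_entries(registry: dict[str, dict[str, Any]]) -> list[dict[str, Any]]:
    """Build spec-shaped prompts/list entries without leaking templates."""
    entries = []
    for name, spec in registry.items():
        entries.append({
            "name": name,
            "description": spec.get("description", ""),
            "arguments": [
                {k: arg[k] for k in ARGUMENT_FIELDS if k in arg}
                for arg in spec.get("arguments", [])
            ],
        })
    return entries
```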

6. Pattern 2: The Compositional Workflow

Note: This example demonstrates how Prompts can orchestrate with other MCP primitives (Tools, Resources, Sampling). These are separate specifications within the MCP ecosystem. This pattern shows the conceptual power of combining primitives, but each primitive must be separately implemented and supported.

- Goal: To show how a Prompt acts as the "Conductor" of an orchestra, coordinating other primitives to handle a healthcare emergency.

- Key Takeaway: The power of Prompts multiplies when they orchestrate other primitives. The Prompt is not the end product; it's the blueprint for a complex, multi-step workflow that combines pre-defined procedure (the template), live data (Resources), and immediate action (Tools).

Implementation: This pattern demonstrates the server-side orchestration logic. The Prompt isn't just text; it's a plan of action.

```python
import asyncio

from mcp import ClientSession
from mcp.types import GetPromptResult, PromptMessage, TextContent


class EmergencyOrchestrator:
    def __init__(self, mcp_client: ClientSession):
        # A connected MCP client session to the server(s) that own the
        # protocol prompts, patient records, and paging tools.
        self.mcp_client = mcp_client

    async def get_emergency_response_prompt(self, arguments: dict[str, str]) -> GetPromptResult:
        patient_id = arguments.get("patient_id")
        emergency_type = arguments.get("emergency_type")
        if not patient_id or not emergency_type:
            raise ValueError("patient_id and emergency_type are required")

        # 1. PROMPT: Get the base protocol template. This is the starting recipe.
        protocol_template_prompt = await self.mcp_client.get_prompt(
            f"protocol_{emergency_type}",
            {"patient_id": patient_id},
        )

        # 2. RESOURCES & TOOLS: In parallel, gather context and take action.
        # The Prompt's logic orchestrates these primitives.
        patient_data_task = self.mcp_client.read_resource(f"emr://{patient_id}/summary")
        alert_tool_task = self.mcp_client.call_tool("alert_response_team", {"type": emergency_type})
        patient_data, alert_confirmation = await asyncio.gather(patient_data_task, alert_tool_task)

        # 3. COMPOSE: Enrich the base template with the live data.
        # Assumes the protocol prompt, the EMR resource, and the tool result all return text.
        final_prompt_text = f"""
**EMERGENCY RESPONSE PLAN: {emergency_type.upper()} for Patient {patient_id}**

{protocol_template_prompt.messages[0].content.text}

---
LIVE PATIENT DATA:
{patient_data.contents[0].text}

---
ACTIONS TAKEN:
- {alert_confirmation.content[0].text}
"""

        return GetPromptResult(
            description=f"Emergency response for {patient_id}",
            messages=[PromptMessage(role="user", content=TextContent(type="text", text=final_prompt_text))],
        )
```
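One caveat in the orchestrator: `asyncio.gather` without `return_exceptions` propagates the first failure, so if the EMR read fails, the whole prompt errors even though the alert tool may still complete, and its confirmation is lost. A minimal sketch of a more forgiving variant, swapping in `return_exceptions=True` plus a timeout (the `gather_context` and `describe` helpers are hypothetical, and the two calls are passed in as plain coroutine functions):

```python
import asyncio
from typing import Any, Awaitable, Callable


def describe(outcome: Any, failure_label: str) -> Any:
    """Turn an exception returned by gather() into a readable status line."""
    if isinstance(outcome, BaseException):
        return f"{failure_label}: {outcome!r}"
    return outcome


async def gather_context(
    read_patient: Callable[[], Awaitable[Any]],
    send_alert: Callable[[], Awaitable[Any]],
    timeout: float = 5.0,
) -> dict:
    """Run both calls concurrently; one failure does not discard the other result."""
    patient, alert = await asyncio.wait_for(
        asyncio.gather(read_patient(), send_alert(), return_exceptions=True),
        timeout=timeout,
    )
    return {
        "patient_data": describe(patient, "UNAVAILABLE"),
        "alert_status": describe(alert, "FAILED"),
    }


async def read_ok() -> str:
    return "BP 90/60, HR 120"


async def alert_down() -> str:
    raise ConnectionError("pager service down")


summary = asyncio.run(gather_context(read_ok, alert_down))
print(summary)
```

The composed prompt can then state plainly that the alert failed, which is safer in an emergency than an opaque error. If the timeout expires, `wait_for` raises, and the server can report that as an internal error (-32603).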

7. Pattern 3: The Domain-Specific Solution (Finance/SOX)

- Goal: To execute a financial data access prompt while ensuring Sarbanes-Oxley (SOX) compliance through segregation of duties checks and an immutable audit trail.

- Key Takeaway: For regulated industries, MCP Prompts are not just workflow tools but enforcement mechanisms for compliance. The server-side logic wraps the core prompt functionality with non-negotiable security and audit controls, transforming a technical primitive into a business-critical compliance solution.

Implementation: This server-side code wraps the prompt logic with SOX-specific controls.

```python
from datetime import datetime, timezone

from mcp.types import GetPromptResult, PromptMessage, TextContent

# Assume these helper classes exist and are properly implemented
# (hypothetical modules, not part of the MCP SDK).
from sox_controls import SegregationOfDutiesEngine, AuditTrailManager
from sox_controls import requires_sox_authorization, get_user_recent_actions
from identity import UserContext, SOXViolationError

sod_engine = SegregationOfDutiesEngine()
audit_log = AuditTrailManager()


# Hypothetical decorator that checks user permissions and injects `user_context`.
# The MCP SDK does not pass a user context to prompt handlers, so your server's
# get_prompt handler must resolve identity (e.g., from its auth layer) and
# dispatch to this function.
@requires_sox_authorization("financial_reporting")
async def get_sox_financial_report_prompt(
    name: str,
    arguments: dict[str, str],
    user_context: UserContext,
) -> GetPromptResult:
    """A SOX-compliant prompt that enforces controls before execution."""
    # ⚠️ SECURITY CRITICAL: Enforce Segregation of Duties (SOX Section 302/404).
    # An analyst who generates a report cannot also be the one who approves it.
    sod_check = await sod_engine.check_compliance(
        user=user_context,
        action=f"execute_prompt_{name}",
        related_financial_actions=await get_user_recent_actions(user_context),
    )
    if not sod_check.compliant:
        await audit_log.record_sox_violation(
            user=user_context,
            violation="sod",
            details=sod_check.violation_details,
        )
        raise SOXViolationError(f"Segregation of Duties violation: {sod_check.violation_details}")

    # If controls pass, proceed with standard prompt logic.
    report_template = "Generate financial report for quarter {{quarter}}..."
    # ... (argument validation and sanitization, as in Pattern 1) ...
    final_prompt_text = report_template.replace("{{quarter}}", arguments["quarter"])

    # Create an immutable, legally defensible audit record (SOX requirement).
    await audit_log.record_financial_access(
        user=user_context,
        prompt_name=name,
        arguments=arguments,
        data_accessed=["quarterly_revenue", "expense_reports"],
        control_executed="SegregationOfDutiesCheck",
        timestamp=datetime.now(timezone.utc),
    )

    return GetPromptResult(
        description="SOX-compliant financial report prompt",
        messages=[PromptMessage(role="user", content=TextContent(type="text", text=final_prompt_text))],
    )
```
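The audit record above is only as immutable as the store behind `AuditTrailManager`. One common way to make a log tamper-evident is hash chaining, where each entry commits to the hash of the entry before it. A minimal, standard-library sketch (the `HashChainedAuditLog` class is hypothetical; it illustrates the technique, not what SOX itself mandates, and production systems typically pair it with write-once storage):

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    """Stable SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class HashChainedAuditLog:
    """Append-only log; editing any earlier entry breaks every later hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list = []

    def append(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "event": json.loads(json.dumps(event)),  # snapshot; also rejects non-JSON values
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        digest = _digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; False means something was altered or removed."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("event", "timestamp", "prev_hash")}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = HashChainedAuditLog()
log.append({"user": "analyst-1", "prompt": "sox_financial_report", "quarter": "2024-Q3"})
log.append({"user": "analyst-1", "action": "export"})
```

Note that hash chaining detects tampering rather than preventing it; someone with write access could rewrite the whole chain, which is why the latest hash is often also stored somewhere independent.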

Part IV: Production Guide - The "Build & Deploy"

8. Server-Side Implementation

8.1 Core Logic & Handlers

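This is a runnable server skeleton using Python and the MCP Python SDK's low-level `Server` class; the handler bodies come from Pattern 1.

```python
# main.py
import asyncio

import mcp.server.stdio
import mcp.types as types
from mcp.server.lowlevel import NotificationOptions, Server

# Minimal capability declaration (per spec), as it appears on the wire:
#   {"prompts": {"listChanged": true}}
# The low-level Server builds this from the registered handlers plus the
# NotificationOptions passed in main().

app = Server("production-prompts-server")

# In production, this would come from a database or configuration service.
# Same shape as the PROMPTS registry defined in Pattern 1.
PROMPT_REGISTRY: dict[str, dict] = {}


@app.list_prompts()
async def list_prompts() -> list[types.Prompt]:
    # Expose only the public schema; templates and defaults stay server-side.
    return [
        types.Prompt(
            name=name,
            description=spec["description"],
            arguments=[
                types.PromptArgument(name=arg["name"], required=arg.get("required", False))
                for arg in spec["arguments"]
            ],
        )
        for name, spec in PROMPT_REGISTRY.items()
    ]


@app.get_prompt()
async def get_prompt(name: str, arguments: dict[str, str] | None) -> types.GetPromptResult:
    # ... (Implementation from Pattern 1, including validation and sanitization) ...
    raise NotImplementedError


async def main() -> None:
    # stdio: the host launches this process and speaks JSON-RPC over stdin/stdout.
    # For networked production services, the SDK also provides a Streamable HTTP
    # transport; check the SDK docs for wiring it to a low-level Server.
    async with mcp.server.stdio.stdio_server() as (read_stream, write_stream):
        await app.run(
            read_stream,
            write_stream,
            app.create_initialization_options(
                notification_options=NotificationOptions(prompts_changed=True)
            ),
        )


if __name__ == "__main__":
    asyncio.run(main())
```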

8.2 Production Hardening

Observability: Log user intentions for business context.

```python
import structlog

logger = structlog.get_logger()

# Inside your get_prompt handler, where `name` and the caller's identity are known:
logger.info("prompt_executed", prompt_name=name, user_id=user_context.id)
```

Performance: For frequently accessed prompts, use caching.

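```python
from functools import lru_cache


@lru_cache(maxsize=256)
def get_cached_prompt_composition(name: str, args_tuple: tuple) -> str:
    """Render a pure template prompt; args_tuple = tuple(sorted(arguments.items()))."""
    # Only cache pure template rendering. Never cache prompts that call Tools
    # or read live Resources, or users will receive stale (or repeated) results.
    text = PROMPTS[name]["template"]  # registry from Pattern 1
    for key, value in args_tuple:
        text = text.replace(f"{{{{{key}}}}}", sanitize_input(str(value)))
    return text


# Usage: convert the (unhashable) arguments dict into a hashable key first.
# text = get_cached_prompt_composition("code_review", tuple(sorted(arguments.items())))
```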

Security: Always sanitize inputs. Never trust arguments from the client.

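```python
import re


# ⚠️ SECURITY CRITICAL: Basic Input Sanitization
def sanitize_input(value: str) -> str:
    # Strips template delimiters and shell/markup characters. This blocks
    # placeholder injection; it does NOT stop natural-language prompt injection.
    sanitized = re.sub(r"[<>{}$`]", "", str(value))
    return sanitized[:2048]  # Limit length to prevent abuse.
```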
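Stripping characters, as `sanitize_input` does, is a deny-list. When an argument has a known shape, an allow-list is stricter: reject anything that does not match instead of silently rewriting it. A minimal sketch; the argument names and patterns in `ARGUMENT_RULES` are hypothetical examples you would replace with your own:

```python
import re

# Hypothetical per-argument rules; define one for every argument you accept.
ARGUMENT_RULES = {
    "case_id": re.compile(r"[A-Z0-9]{4,12}"),
    "quarter": re.compile(r"\d{4}-Q[1-4]"),
}


def validate_arguments(arguments: dict) -> dict:
    """Reject (rather than rewrite) anything outside the allow-list."""
    clean = {}
    for key, value in arguments.items():
        rule = ARGUMENT_RULES.get(key)
        if rule is None:
            raise ValueError(f"Unexpected argument: {key}")
        if not isinstance(value, str) or not rule.fullmatch(value):
            raise ValueError(f"Invalid value for {key}")
        clean[key] = value
    return clean
```

A `ValueError` raised here maps naturally to a -32602 (Invalid params) response.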

9. Client-Side Implementation

9.1 Core Logic & State Management

A client needs to discover available prompts and then allow the user to execute them.

```typescript
// Example TypeScript client logic using the official TypeScript SDK.
import { Client } from "@modelcontextprotocol/sdk/client/index.js";
import {
  PromptListChangedNotificationSchema,
  type GetPromptResult,
  type Prompt,
} from "@modelcontextprotocol/sdk/types.js";

class PromptFeature {
  public availablePrompts: Prompt[] = [];

  constructor(private client: Client) {
    // Listen for changes to the prompt list
    this.client.setNotificationHandler(PromptListChangedNotificationSchema, async () => {
      console.log("Prompt list changed, refreshing...");
      await this.initialize();
    });
  }

  async initialize(): Promise<void> {
    try {
      // Follow nextCursor until the server stops paginating.
      const prompts: Prompt[] = [];
      let cursor: string | undefined;
      do {
        const page = await this.client.listPrompts(cursor ? { cursor } : undefined);
        prompts.push(...page.prompts);
        cursor = page.nextCursor;
      } while (cursor);
      this.availablePrompts = prompts;
    } catch (error) {
      console.error("Failed to list prompts:", error);
    }
  }

  async execute(name: string, args: Record<string, string>): Promise<GetPromptResult> {
    // ... (validate args against this.availablePrompts before sending) ...
    return this.client.getPrompt({ name, arguments: args });
  }
}
```

9.2 User Experience (UX) Patterns

⚠️ Note: Extension Pattern - The "elicitation" form pattern described here is NOT part of the official MCP specification. (The 2025-06-18 revision does define a separate, server-initiated Elicitation feature, `elicitation/create`; this client-side form is not that.) It's a proposed pattern for handling incomplete commands that could be implemented at the application layer.

Handling Incomplete Commands: A robust application shouldn't just show a protocol error. It can use the argument schema from prompts/list to build an interactive form.

User: /run_report

Client Logic: Sees run_report is a valid prompt but required arguments report_type and date_range are missing.

UI Renders:

```
-----------------------------------
| Please complete your report request:
|
| Report Type: [Dropdown: Sales, Inventory]
| Date Range:  [Date Picker]
|
| [ Submit ]
-----------------------------------
```

Slash Command Interface: This is the canonical UX pattern. It's fast, discoverable, and clearly separates commands from conversation.

Chat Input: /fraud_triage <case_id> [cluster_id]

(The UI shows autocomplete based on the `prompts/list` result.)
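Both the incomplete-command form and slash-command autocomplete rest on the same logic: parse what the user typed and compare it with the argument schema from `prompts/list`. A minimal sketch (the `parse_slash_command` function is hypothetical; it accepts both the `key=value` form from the architecture diagram and the positional form in the chat input example, so a positional value that itself contains `=` must be written as `key=value`):

```python
from __future__ import annotations

import shlex
from typing import Any


def parse_slash_command(line: str, prompts: list[dict[str, Any]]) -> tuple[str, dict[str, str], list[str]]:
    """Return (prompt name, parsed arguments, required arguments still missing)."""
    tokens = shlex.split(line)  # honors quotes, e.g. case_id="7F93"
    if not tokens or not tokens[0].startswith("/"):
        raise ValueError("Not a slash command")
    name = tokens[0][1:]
    schema = next((p for p in prompts if p["name"] == name), None)
    if schema is None:
        raise ValueError(f"Unknown prompt: {name}")

    declared = [a["name"] for a in schema.get("arguments", [])]
    positional = iter(declared)
    args: dict[str, str] = {}
    for token in tokens[1:]:
        key, sep, value = token.partition("=")
        if sep:
            args[key] = value
        else:
            # Positional value: assign it to the next declared argument not yet set.
            slot = next((n for n in positional if n not in args), None)
            if slot is None:
                raise ValueError(f"Too many positional values: {token!r}")
            args[slot] = token

    missing = [a["name"] for a in schema.get("arguments", [])
               if a.get("required") and a["name"] not in args]
    return name, args, missing


PROMPTS_FROM_LIST = [
    {"name": "fraud_triage", "arguments": [
        {"name": "case_id", "required": True},
        {"name": "cluster_id", "required": False},
    ]},
    {"name": "run_report", "arguments": [
        {"name": "report_type", "required": True},
        {"name": "date_range", "required": True},
    ]},
]
```

A non-empty `missing` list is the client's cue to render the form instead of sending a `prompts/get` that would fail with -32602.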

Part V: Guardrails & Reality Check - The "Don't Fail"

10. Decision Framework

10.1 When to Use This Primitive

| If you need to... | Then use Prompts because... | An alternative would be... |

|---|---|---|

| Enforce a multi-step, standard operating procedure. | They provide guaranteed procedural integrity and an immutable workflow. | Tools, but this offloads complex orchestration logic to the LLM, risking deviation. |

| Eliminate ambiguity in user commands. | The user-controlled slash command model has zero interpretation. | Natural language, but this invites the Ambiguity Tax and unpredictable results. |

| Scale expert knowledge across an organization. | They codify expertise into reusable, democratized templates. | Documentation and training, which is slow, expensive, and doesn't prevent human error. |

| Create a legally-defensible audit trail. | The execution path is deterministic from user command to result. | Logging LLM conversations, which is not legally defensible as it can't prove user intent, only the AI's interpretation. |

10.2 Critical Anti-Patterns (The "BAD vs. GOOD")

- Anti-Pattern: Conflating Prompts and Tools (Embedding Logic in the Template)
  - BAD 👎: The prompt template itself tries to contain complex conditional logic.
  - Why it's wrong: This is brittle, hard to maintain, and violates separation of concerns.
  - GOOD 👍: The server-side orchestration logic handles the conditions and composes the final template.

- Anti-Pattern: Ignoring `prompts/list` and Hard-Coding Prompts
  - BAD 👎: The client has a hard-coded list of available prompts and their arguments.
  - Why it's wrong: The client will break as soon as the server adds, removes, or changes a prompt. It defeats the purpose of a dynamic protocol.
  - GOOD 👍: The client calls `prompts/list` on startup and listens for `list_changed` notifications to keep its UI perfectly in sync with the server's capabilities.

- Anti-Pattern: Treating Prompts as Simple String Formatting
  - BAD 👎: The server just does a basic `template.format(**args)` without any validation, sanitization, or contextual enrichment.
  - Why it's wrong: This misses the entire point of orchestration and opens up massive security holes (e.g., template injection).
  - GOOD 👍: The server handler is a rich orchestration layer that validates, sanitizes, fetches data from Resources, and composes a response that is far more than the sum of its parts.
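Template injection has a concrete shape. The sequential `str.replace` loop in Pattern 1 re-scans text it has already substituted: if the `code` argument contains `{{language}}` and is substituted first, a later iteration expands it. Stripping `{}` from values papers over that; a single-pass substitution removes the problem structurally. A minimal sketch (the `render` helper is hypothetical, and it addresses template injection only, not natural-language prompt injection):

```python
from __future__ import annotations

import re

PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render(template: str, arguments: dict[str, str]) -> str:
    """Substitute {{name}} placeholders in a single pass.

    re.sub scans the template once, so a value that itself contains
    '{{other}}' is inserted literally and never expanded again.
    Unknown placeholders raise instead of leaking into the output.
    """
    def replace(match: re.Match) -> str:
        key = match.group(1)
        if key not in arguments:
            raise KeyError(f"No value for placeholder {key!r}")
        return arguments[key]

    return PLACEHOLDER.sub(replace, template)


output = render(
    "Review this {{language}} code:\n{{code}}",
    {"code": "{{language}}", "language": "Python"},
)
```

Here `output` keeps the attacker-supplied `{{language}}` as literal text inside the code section instead of expanding it.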

11. The Reality Check

11.1 What This Guide Adds Beyond the Official Spec

The following patterns and recommendations in this guide extend beyond the official MCP specification to provide practical, real-world implementation advice:

- The client-side "elicitation" form pattern for handling incomplete commands (a recommended application-layer pattern, distinct from the protocol's own server-initiated Elicitation feature).

- The detailed SOX compliance example (a domain-specific implementation of the security requirements).

- Caching strategies and observability patterns (production best practices).

- The multi-primitive orchestration pattern (a conceptual model for using multiple MCP specifications together).

11.2 Security Requirements

Per the MCP specification:

- Implementations MUST carefully validate all prompt inputs and outputs.

- Prevention of injection attacks is required.

- Unauthorized access to resources (especially when using embedded resources) must be prevented.

The following are strongly recommended best practices for meeting these requirements:

- Input Sanitization: As shown in the code examples, all user-provided data in `arguments` must be sanitized before being used in templates or downstream systems.

- Access Control: The protocol does not define per-prompt permissions (its optional authorization specification covers transport-level access, not which user may run which prompt). Your server is responsible for implementing robust access control to ensure a user has the necessary permissions to execute a given prompt.

- Compliance Logic: For regulated industries, the server is responsible for embedding all necessary compliance checks (like the SOX example) into the prompt orchestration logic.

Never assume the protocol will protect you. It provides the tools for building secure systems, but the responsibility for security rests entirely with the server implementation.

Part VI: Quick Reference - The "Cheat Sheet"

12. Common Operations & Snippets

Server: Define a prompt schema (Python)

```python
PROMPT_SCHEMA = {
    "name": "my_prompt",
    "title": "My Awesome Prompt",
    "description": "...",
    "arguments": [
        {"name": "arg1", "required": True},
        {"name": "arg2", "required": False},
    ],
}
```

Client: Execute a prompt with arguments

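```typescript
// `mcpClient` is a connected Client from "@modelcontextprotocol/sdk/client/index.js".
const result = await mcpClient.getPrompt({
  name: "code_review",
  arguments: { code: "...", language: "typescript" },
});
```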

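Client: List available prompts (first page)

```typescript
// Returns one page; follow `nextCursor` with listPrompts({ cursor }) to fetch the rest.
const { prompts: availablePrompts, nextCursor } = await mcpClient.listPrompts();
```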

13. Configuration & Error Code Tables

prompts/list Parameters (params)

| Parameter | Type | Default | Description |
|---|---|---|---|
| cursor | string | null | The pagination cursor from a previous response. |

prompts/get Parameters (params)

| Parameter | Type | Required | Description |
|---|---|---|---|
| name | string | Yes | The programmatic name of the prompt to execute. |
| arguments | object (string values) | No | A key-value map of arguments to substitute into the template. |

Error Code Reference

| Code | Meaning | Recommended Client Action |
|---|---|---|
| -32601 | Method Not Found | Disable prompt features in the UI. |
| -32602 | Invalid Params | Prompt the user for missing required arguments or inform them the command is invalid. |
| -32603 | Internal Error | Display a generic error message and suggest retrying. |